The World Wide Web has made great strides since its inception. It has revolutionized the way we live, work, and socialize, but along with its many benefits it has also given rise to a darker, less-discussed problem: online harassment. More recently, advances in artificial intelligence (AI) have brought new threats to the digital landscape. Deepfake technology, in particular, is pushing the frontiers of online harassment, raising concern among academics, lawmakers, and the public alike.

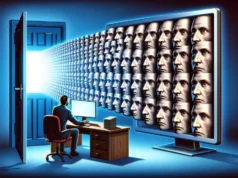

Deepfake technology uses machine learning algorithms to create convincingly realistic, digitally altered videos or images that make people appear to do or say things they never did. Though still relatively new, it has swiftly become a powerful tool of manipulation and deception, giving cyberbullies new ammunition and making online harassment more severe.

The origins of deepfake technology can be traced back to academia. As early as 1997, researchers were developing algorithms that could learn how to ‘morph’ one image into another. The term “deepfake,” though, draws its name and insidious connotations from a Reddit user who, in 2017, shared pornographic videos in which celebrities’ faces had been superimposed on the performers, sparking widespread condemnation.

The technology’s troubling potential for misuse has been in the spotlight ever since. Intrusive and often defamatory, deepfakes have introduced unprecedented challenges related to consent, privacy, and authenticity, taking online harassment to dangerous new heights.

In a preliminary study by the cybersecurity company Deeptrace, over 14,000 deepfake videos were found circulating on the internet in 2019, roughly double the number counted less than nine months earlier. Deeptrace also noted that non-consensual pornographic content accounted for 96% of all deepfake videos online, indicating rampant misuse of the technology.

In the murky world of online harassment, deepfakes could greatly exacerbate existing problems. Their victims are predominantly women, and they give perpetrators the opportunity to shame, blackmail, and inflict psychological harm without needing any actual compromising material. Can the law keep up?

Bobby Chesney and Danielle Citron, law professors at the University of Texas at Austin and Boston University respectively, explore this dilemma in a jointly authored paper. They argue that current laws are ill-equipped to handle the fallout from deepfakes, which straddle multiple grey areas concerning defamation, privacy, and the right to one’s image.

Even when laws do exist, the ability to remain anonymous online poses a significant hurdle to enforcing them. Those victimized by deepfakes often have little recourse, as identifying perpetrators, let alone holding them accountable, remains immensely challenging.

In addition to their disturbing potential for personal harassment, deepfakes also pose significant threats on a larger scale. They can be used for political manipulation and misleading propaganda, and could even spark international conflicts. Worse, these forgeries are becoming increasingly difficult to detect as AI keeps improving, which makes the threats all the more potent.

Leading tech companies like Google, Facebook, and Twitter have been grappling with the deepfake menace. In 2019, Google released a large dataset of visual deepfakes as part of its efforts to boost research into detection techniques. Similarly, Facebook has set aside substantial funds for its Deepfake Detection Challenge, aimed at encouraging developers across the globe to create innovative solutions to counter this problem.

For now, the fight against deepfakes is far from over. It demands concerted global efforts that combine legal, technical, and societal solutions. As we navigate this new frontier of online harassment, it’s critical that we stay informed and continue to question what we see online.

In this digital era, it’s key to remember: Seeing is no longer believing.

Sources:

1. Deeptrace: “The State of Deepfakes: Landscape, Threats, and Impact”.

2. Bobby Chesney and Danielle Citron, California Law Review: “Deep Fakes: A Looming Challenge for Privacy, Democracy, and National Security”.

3. Google AI Blog: “Contributing Data to Deepfake Detection Research”.

4. Facebook AI: “The Deepfake Detection Challenge (DFDC)”.

5. Reddit.com.